Our Approach to Contributing to and Learning from Evidence

Photo credit: Juhasz Imre from Pexels

What does learning have to do with infrastructure? A lot, apparently.

“Infrastructure” evokes images of highways, power lines, or digital networks – things that connect us to places, people, and information. For some, this is boring stuff; for accountability activists, it means huge public works projects to be closely monitored. At TAI, we see infrastructure as critical to learning – a way to connect people, ideas, and practice.

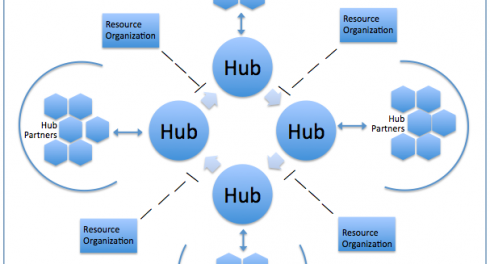

Our current strategy has an explicit learning component, and one of our three intermediate learning outcomes is to develop adequate infrastructure to support learning across the transparency, accountability, and participation (TAP) development community. TAI aims to provide (infra)structure for our staff and donor members to contribute to and learn from TAP field evidence and experience, alongside our other key stakeholders – our members’ grantees, other funders, and other implementing and research groups working on TAP goals and outcomes.

What does the learning infrastructure look like in practice?

A recent TAI event provides an example of what this might look and feel like.

Learning Day participants discuss implications of TAI-member commissioned evidence on governance and accountability, citizen voice and government responsiveness, and the role of traditional and digital media in accountability.

Evidence comes in all shapes and sizes, and it is rare for multiple, otherwise unconnected initiatives to produce results on related topics at the same time. Such was the case for a body of initiatives that were all funded directly or indirectly by TAI members and that each explored TAP-related outcomes. And so, TAI and the Transparency, Accountability, and Politics group at DFID co-hosted a Learning Day in February, bringing together our members and partners involved in producing this knowledge to explore: (1) the main findings; (2) the implications of this evidence for programmatic design and grant-making practices; and (3) the lessons funders might draw in commissioning and using future TAP evidence.

Where did the learning infrastructure take us?

Here are three key insights I drew from the discussion and participants’ reflections.

- Learning with intentionality – Learning from the evidence is perhaps less difficult than often anticipated, but this practice does take intentionality and some level of resources and skills. And funders and practitioners face many similar challenges in doing this well, from getting the timing right relative to decision-making milestones, to promoting uptake among colleagues – including keeping the fun in learning (as some noted, try serving cake at your next staff learning event!).

- Evidence as a public good – There was a clear value felt around the room in making evidence available to immediate peers, but also to peers outside of our own organization. We touched on various related points, including the use and cataloging of “grey literature”, disseminating evidence through existing or new channels, and intentionally seeking evidence that goes beyond – and even challenges – our own experience and assumptions.

- Continue building the evidence base – And, importantly, we should celebrate the rigor and types of evidence available in the transparency and accountability sector, but we should also be careful not to conclude that there is an excess of usable evidence. This has important implications for the types of learning and evaluation questions that both funders and practitioners pose in their respective work. And hopefully, we can answer those questions by building on, rather than reinventing, the evidence base.

We certainly could have used more time to digest this wealth of knowledge and evidence and to consider more deeply the connection to our own work and practice. Based on our post-event feedback, most participants either intended to connect, or had already connected, with other participants on the content discussed. TAI will continue to support a learning infrastructure to connect people, ideas, and practice around the current and future TAP evidence base, and ultimately to strengthen the TAP field's efforts and achievements.

In the coming months, TAI will be releasing a package of evidence reviews on the impact of transparency on non-electoral accountability. In the meantime, we are happy to share below the links to the materials discussed at our Learning Day.

Making All Voices Count

Appropriating Technology for Accountability: Messages from Making All Voices Count, Research Report

DFID Social Accountability Macro Evaluation

Policy Briefing: What works for Social Accountability? Findings from DFID’s Macro Evaluation

BBC Media Action Global Programme

Practice briefing: Doing debate differently: media and accountability

TAI Evidence Reviews by Lily Tsai and Varja Lipovsek

What do We Know About Transparency and Non-Electoral Accountability? Synthesizing Evidence from the Last Ten Years (forthcoming)

How to Learn from Evidence: A Solutions in Context Approach (forthcoming)